How to uplift your A/B testing by 15%

The problem with A/B testing

Over the last few years, A/B testing has become an established and mature discipline for most e-commerce, online conversion and digital experience teams across industries. I am often approached by these teams asking if we can identify why a particular A/B test has failed to deliver the expected improvements. Often these tests have been targeted using information from analytics systems that identify pages with high bounce rates or visitor drop-off, and they have been built on assumptions about what could be causing problems on those pages.

The big problem is that without watching real users interact with these pages and observing their behavior first hand, the A/B testing activity is driven by guesswork. All too often, that means the tests fail.

Use struggle scores to target A/B testing

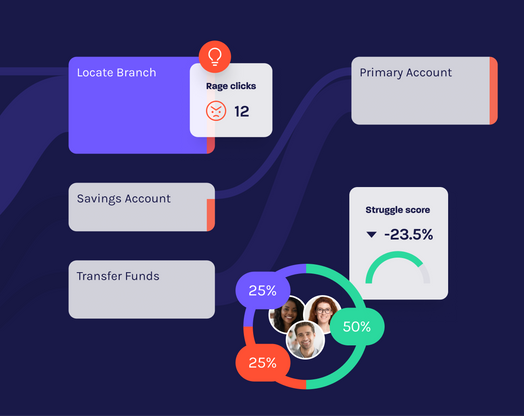

The clients that see the greatest success use our unique struggle score to identify the biggest sticking points on their website or mobile app, and then target these areas for improvement with optimizations that are developed and implemented after comprehensive A/B testing.

Use session replay and form analytics to identify potential A/B tests

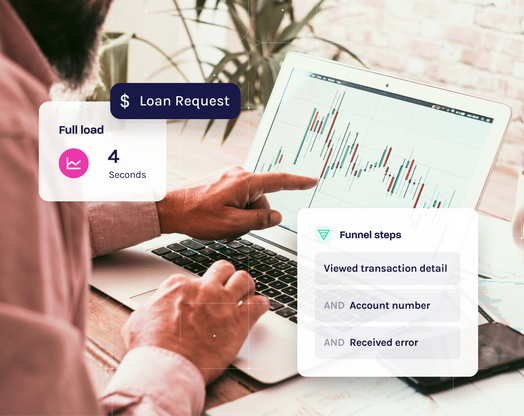

The Glassbox digital experience analytics platform enables you to identify conversion issues by watching individual session recordings at these points of struggle. Seeing how users behave in these moments helps you understand the underlying root causes and, in turn, identify potential solutions that can be A/B tested before full deployment.

Using form analytics to review field level drop-off is essential too. This is a fast way to understand exactly where on a web form users drop off.
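To make the idea of field-level drop-off concrete, here is a minimal Python sketch. It is not how Glassbox calculates anything. It assumes a hypothetical export of form sessions in which each record carries a `submitted` flag and, for abandoned sessions, the `last_field` the user touched, and it assumes users move through the form in a fixed order. The function name and record shape are illustrative only.

```python
def field_drop_off(sessions, field_order):
    """Share of sessions reaching each field that abandoned the form at that field.

    Assumptions (hypothetical data shape, not a Glassbox export format):
    - each session is a dict with a boolean "submitted"
    - abandoned sessions also carry "last_field", the last field touched
    - fields are visited in the order given by field_order
    """
    position = {name: i for i, name in enumerate(field_order)}
    reached = [0] * len(field_order)
    abandoned = [0] * len(field_order)

    for session in sessions:
        if session["submitted"]:
            # A submitted session passed through every field.
            last = len(field_order) - 1
        else:
            last = position[session["last_field"]]
            abandoned[last] += 1
        # Count the session as having reached every field up to its last one.
        for i in range(last + 1):
            reached[i] += 1

    return {
        name: (abandoned[i] / reached[i] if reached[i] else 0.0)
        for i, name in enumerate(field_order)
    }


# Illustrative, made-up sessions for a three-field registration form.
example_sessions = [
    {"submitted": True},
    {"submitted": True},
    {"submitted": False, "last_field": "password"},
    {"submitted": False, "last_field": "email"},
]
drop_off = field_drop_off(example_sessions, ["email", "password", "postcode"])
```

A field with a noticeably higher rate than its neighbours is a natural candidate for an A/B test, and the session recordings for that field are the place to look for the reason.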

Running tests based on the hard evidence collected from real user sessions takes the guesswork out of A/B testing.

Integrate Glassbox with your A/B testing tool to unlock extra value

I also recommend reviewing your actual tests within Glassbox once they have run. Although your testing tool will tell you which variant won, it won’t tell you why. When you watch the sessions that sit behind the test and the control, it can quickly become obvious that the initial issue has not been resolved and more work is needed.

To do this, you should integrate Glassbox with your A/B testing solution. We have easy-to-add integrations with several leading tools. These integrations let you segment Glassbox data by individual test variant and replay the individual sessions that relate to each experiment.
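As a rough illustration of what segmenting by variant gives you, the Python sketch below summarizes sessions per test variant. It does not use any Glassbox or testing-tool API. It assumes a hypothetical list of session records, each tagged with a `variant` label plus `converted` and `saw_error` flags, and all of those names are made up for the example.

```python
from collections import defaultdict


def summarize_by_variant(sessions):
    """Conversion and error rates per A/B test variant.

    Assumes each session is a dict with "variant" (str), "converted" (bool)
    and "saw_error" (bool). This record shape is hypothetical.
    """
    totals = defaultdict(lambda: {"sessions": 0, "converted": 0, "errors": 0})
    for session in sessions:
        bucket = totals[session["variant"]]
        bucket["sessions"] += 1
        bucket["converted"] += int(session["converted"])
        bucket["errors"] += int(session["saw_error"])

    return {
        variant: {
            "sessions": t["sessions"],
            "conversion_rate": t["converted"] / t["sessions"],
            "error_rate": t["errors"] / t["sessions"],
        }
        for variant, t in totals.items()
    }


# Illustrative, made-up sessions for a control and one test variant.
example_sessions = [
    {"variant": "control", "converted": True, "saw_error": False},
    {"variant": "control", "converted": False, "saw_error": True},
    {"variant": "test", "converted": True, "saw_error": False},
    {"variant": "test", "converted": True, "saw_error": True},
]
summary = summarize_by_variant(example_sessions)
```

Numbers like these only tell you which variant is ahead. The sessions behind a variant with a high error rate are the ones worth replaying to find out why.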

Retailer reaps the benefits

Over the last few weeks, I’ve helped a leading retailer with their A/B testing program. One project focused on the registration process within their checkout journey, where the retailer had previously run an A/B test that yielded a lower conversion rate and a 2% increase in the number of customers seeing errors. Once we applied the approach outlined above, they saw a 15% improvement in completion rates for that same page.